- deploy webapp to web

- set up Jenkins and Nexus to actually deploy releases

- set up dns to point to each of docker instances


license

Generating SideEffect Classes

There are at least three ways to create a SideEffect class (java file) that can be used as an option:

- http://foo.smoslt.com/generating-sideeffect-classes/ – a wizard

- same wizard as above, only run on your local box

- Copy and paste an existing TemplateSideEffect.java file

Any of these is OK, but if you are willing to use the first one (the hosted wizard), your work product can be automagically shared with others.

more here later

Saturation vs MoreIsBetter Scoring for SideEffects

Imagine two different types of scoring scenarios

- Hours – number of man-hours it takes to complete a complex software creation schedule

- ScalesUp – feature of software that makes it scale to near infinite number of users

Both of these are actually Saturation based scoring models, but only theoretically. In practice, there is no hope of ever reducing the number of hours to zero, so Hours is really always a MoreIsBetter model: the more efficient the process (in this case, the fewer the hours), the better.

- 2863 hours: good

- 2714 hours: better

But ScalesUp is like most other scoring aspects of creating software. It is a goal which is actually quite attainable in a very finite sense. Once you have built software which can and does deploy to near infinite scale, you are pretty much done with that. It works, and more, in this case, is not better. You can’t improve much on perfection. This is the Saturation model, and that model can be easily represented as a 0-100 score, where 100 is the highest score.

- score of 0 – 0% complete

- score of 70 – 70% complete

- score of 99 – 99% complete

We can conclude, then, that these two fit opposite scoring models:

- Hours: MoreIsBetter model

- ScalesUp: Saturation model
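The difference between the two models can be sketched in Java. This is only an illustration: `ScoringModel`, `normalize` and `isBetterHours` are hypothetical names, not part of the SMOSLT codebase. It assumes a Saturation score is clamped to the 0-100 range, while a MoreIsBetter value such as Hours has no ceiling and only means something when compared with another run.

```java
// Hypothetical sketch: names are illustrative, not SMOSLT's actual API.
enum ScoringModel {
    SATURATION {
        // A saturation score is a percentage complete, so it is capped at 100.
        @Override
        int normalize(int raw) {
            return Math.max(0, Math.min(100, raw));
        }
    },
    MORE_IS_BETTER {
        // No ceiling: the value is only meaningful when compared to another run.
        @Override
        int normalize(int raw) {
            return raw;
        }
    };

    abstract int normalize(int raw);
}

class ScoringModelDemo {
    // For Hours, "more is better" means more efficiency, i.e. fewer hours.
    static boolean isBetterHours(int candidateHours, int baselineHours) {
        return candidateHours < baselineHours;
    }

    public static void main(String[] args) {
        System.out.println(ScoringModel.SATURATION.normalize(130));      // 100
        System.out.println(ScoringModel.MORE_IS_BETTER.normalize(2714)); // 2714
        System.out.println(isBetterHours(2714, 2863));                   // true
    }
}
```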

Scoring the Saturation Model

This is something that is not obvious until you start the process of scoring for Saturation in a specific score. It takes a different kind of thinking to score against a Saturation model when there are a number of different options that each contribute to a score.

Arbitrary Subtotals

When scoring for saturation, you know that the score can never exceed 100, but you also know that many different activities may be required to meet that goal. This work might be spread across several options or, in the case of SMOSLT, across SideEffects acting as options.

Let’s take an oversimplified example of a ScalesUp score:

- Scalable Software: software written to operate across as many machines as required

- Cloud Provider: relationship with a vendor to provide as many machines as required

- DevOps: deployment written to recognize and react to increased need

You can’t get to 100 without each of these aspects being complete, so really you have to be able to score each separately. The percentage of the score allocated to each is arbitrary; that they should total 100% is not arbitrary, it is a given.

- Scalable Software option: 25%

- Cloud Provider option: 17%

- DevOps option: 58%

Only now can you begin the process of scoring. For each of the above 3 options, you have the task of separately scoring completion against its respective target.
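A minimal sketch of that rule in Java follows. The `Apportionment` class and its methods are hypothetical, and the weights are illustrative values chosen to total 100. The split between options can be anything, but a set of weights that does not total 100 is rejected before any scoring starts.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch: class and method names are illustrative only.
class Apportionment {
    private final Map<String, Integer> weights = new LinkedHashMap<>();

    Apportionment add(String option, int percent) {
        weights.put(option, percent);
        return this;
    }

    int total() {
        int sum = 0;
        for (int w : weights.values()) {
            sum += w;
        }
        return sum;
    }

    // The split between options is arbitrary; the total of 100 is not.
    void validate() {
        if (total() != 100) {
            throw new IllegalStateException("Weights total " + total() + ", expected 100");
        }
    }

    public static void main(String[] args) {
        Apportionment scalesUp = new Apportionment()
                .add("Scalable Software", 25)
                .add("Cloud Provider", 17)
                .add("DevOps", 58);
        scalesUp.validate(); // passes: 25 + 17 + 58 = 100
        System.out.println("total = " + scalesUp.total());
    }
}
```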

Saturation Based Scoring Within a SideEffect

To explore what it means to score a specific option that affects a saturation score, let’s look at the simpler option of Cloud Provider above. Note first, that this option may affect several different score types, including all of these below, most of which are saturation based scoring models.

- Saturation

  - ScalesUp

  - ScalesDown

  - Durability

  - ManagerSpeak

  - FeedbackSpeed

- MoreIsBetter

  - Hours

  - POLR

  - LongTerm

This breakdown may or may not be representative of what really belongs in Saturation based scoring, but let’s take one that is pretty clear – ScalesUp. This is because an app either scales up properly or it doesn’t. It can easily be measured and tested, and it is pretty obvious when it fails.

So now we know that within ScalesUp, repeating from above, each of these options contributes an arbitrarily apportioned part of this score:

- Scalable Software option: 25%

- Cloud Provider option: 17%

- DevOps option: 58%

If we look at only the CloudProvider option, or side effect, we then need to do these things

- Set the apportionment of the score at 17%

- As the necessary work is completed, increment the score against that 17%

- When this work is complete, you have incremented this score by no more and no less than 17 points
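The three steps above can be sketched as follows. This is a hypothetical illustration, not the actual SideEffect API: `ApportionedScore` holds one option's share of the saturation score and scales it by the fraction of work done, so the contribution can never exceed the share.

```java
// Hypothetical sketch: not the actual SideEffect API.
class ApportionedScore {
    private final int share;     // this option's share of the 0-100 score, e.g. 17
    private double fractionDone; // 0.0 (not started) to 1.0 (complete)

    ApportionedScore(int share) {
        this.share = share;
    }

    // Record progress; values outside 0..1 are clamped so the share is never exceeded.
    void setFractionDone(double fraction) {
        this.fractionDone = Math.max(0.0, Math.min(1.0, fraction));
    }

    // Points this option currently contributes to the saturation score.
    double contribution() {
        return share * fractionDone;
    }

    public static void main(String[] args) {
        ApportionedScore cloudProvider = new ApportionedScore(17);
        cloudProvider.setFractionDone(0.5);
        System.out.println(cloudProvider.contribution()); // 8.5
        cloudProvider.setFractionDone(1.0);
        System.out.println(cloudProvider.contribution()); // 17.0
    }
}
```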

Another Challenge of the Saturation Modeling: 100%

Saturation is based on an index of 0 to 100%. This sounds perfectly logical, and it can even be logical in implementations. Take ScalesUp for example. If you are using Cassandra for your persistence store and have the appropriate use case, at least for the persistence piece you are at 100%. Cassandra scales linearly right out of the box. Can’t get any closer to 100% than that. So if you have Cassandra in your mix, make your ScalesUp score 100%. Right?

Not so fast there, buddy. Let’s take another look.

Your project has a lot more pieces than just a persistence store. Each of these pieces can wreck a ScalesUp score. If your persistence store scales perfectly, but you have a web tier and a messaging tier too, and they don’t scale up well, then you’re not at 100% yet. So now you have to modify your scoring such that web tier and messaging tier and persistence store together add up to 100%. Is that a third each? Or does web tier get 50%, persistence store 40% and messaging tier 10%? Good question.

code guidelines/notes

guidelines

- avoid anything that requires re-architecting later

- set aside anything that can be a straightforward addition later

here is my baseline

- all projects are MJWA OSGi ready jars, but not running in OSGi container

- all my projects do not use carefully architected checked exceptions

- but rather just stupid RuntimeExceptions to fix later

- do not do real testing, but do use junit to just get the methods running

- do use Neal Ford’s composed method, but only enough to make my job easier, not religiously

here are my options

- foo

my baseline vs other baselines

- versus Marcos baseline

- versus Matt baseline

- versus ….

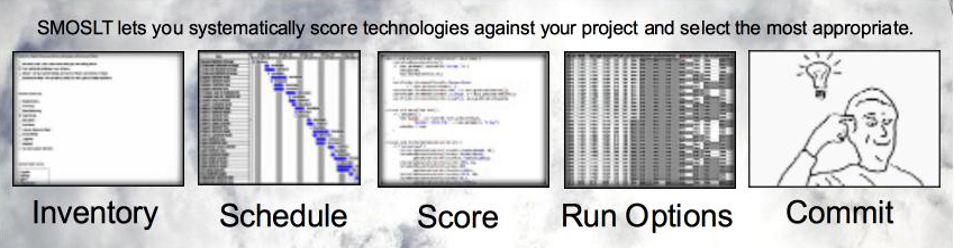

SMOSLT.main

Command Line Application for SMOSLT

Glossary

- PL: ProjectLibre

- [compliant]: ProjectLibre file which conforms to exact specifications expected for SMOSLT.stacker – see separate document

Features

- import a [compliant] PL file and run SMOSLT.assume against same

- something here about two files, one for baseline another for latest something

- maybe something here about narratives or options or generating

SMOSLT.stacker [compliant] specifications

- all baseline tasks

- no automated options (generated by SMOSLT)

- resources individually named, with exact group that belongs to

- all tasks assigned with either

- specified individual by exact name

- specified group(s) by exact name, and count

- comma delimited

Somewhere need to note that

cartoon of evaluating options process

from situation come up with type of … to look up prototype/template

copy template into my…

modify template to reflect situation

add resources to match task titles

add/modify predecessor relationships

add/modify resources to reflect situation

add/modify options modifiers to reflect situation

rerun to extend out into actual schedule

primary goal is to allow you to not have to pick two

- keep things fluid

- not limiting visibility into options

- allow you to have less than complete information

SMOSLT.options – OrGrouping

“This or this or this option, but not more than one from this group”

What Or-Grouping is NOT:

SMOSLT, as it relates to software options, offers a way to evaluate where to commit your limited resources. Should I organize the build around a Continuous Integration server? Or commit those same resources, instead, to deploying my services to smaller linux container modules?

- Continuous Integration

- Docker Container Service Deployments

Each of these concerns requires a commitment of resources, and I only have enough resources to do one. But they do not overlap: I could do both, if I had enough resources.

What Or-Grouping IS:

If I decide to commit resources to Continuous Integration, I’m still not done with the comparison of options. That’s where or-grouping comes in. Consider these options for Continuous Integration servers:

- Jenkins

- Hudson

- Thoughtworks Go server

- Bamboo

I need to pick whichever one of these options makes the most sense for my organization.

I would never choose more than one of these, it’s an either/or choice. Pick one.

How Or-Grouping Fails in SMOSLT.options module:

The magic of SMOSLT.options is that, unlike its human operator, it can compare every combination of options given to it.

Yet this same feature, without or-grouping, has an unintended side effect. For example, it might cause Jenkins and Bamboo to be selected for comparison at the same time! Wrong! The human would know that you either use Jenkins or Bamboo to achieve Continuous Integration, but you would never use both in combination! SMOSLT.options has no way of knowing that, without or-grouping.

How To Use Or-Grouping:

Or-grouping is implemented via naming conventions. Consider again, the same list of candidates for SMOSLT.options to compare:

- Jenkins

- Hudson

- ThoughtworksGo

- Bamboo

To implement an Or-Group, we rename this same list as follows:

- Ci1-Jenkins

- Ci2-Hudson

- Ci3-ThoughtworksGo

- Ci4-Bamboo

SMOSLT.options module now knows to never evaluate any combination of two or more of these options at the same time. For example, SMOSLT.options would not evaluate any combination that selects more than one of the following:

- Ci1-Jenkins

- Ci2-Hudson

- Ci3-ThoughtworksGo

- Ci4-Bamboo
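A minimal sketch of how such a naming convention could be checked is shown here. The regular expression is an assumption about the convention (letters, then a number, then a hyphen), and `OrGroupCheck` and the `DockerDeployments` option name are hypothetical; the actual SMOSLT.options implementation may differ.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch: the prefix pattern below is an assumption about the naming convention.
class OrGroupCheck {
    // Letters, then a number, then a hyphen: "Ci1-Jenkins" belongs to group "Ci".
    private static final Pattern OR_GROUP = Pattern.compile("^([A-Za-z]+)\\d+-.+$");

    // Returns the or-group prefix, or null if the name carries no or-group prefix.
    static String groupOf(String optionName) {
        Matcher m = OR_GROUP.matcher(optionName);
        return m.matches() ? m.group(1) : null;
    }

    // A combination is valid only if no or-group appears more than once.
    static boolean isValidCombination(List<String> selected) {
        Set<String> seen = new HashSet<>();
        for (String name : selected) {
            String group = groupOf(name);
            if (group != null && !seen.add(group)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(isValidCombination(Arrays.asList("Ci1-Jenkins", "DockerDeployments"))); // true
        System.out.println(isValidCombination(Arrays.asList("Ci1-Jenkins", "Ci4-Bamboo")));        // false
    }
}
```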

Scoring and Inheritance with Or-Groups

These CI server options are more alike than they are different. Differences between Continuous Integration servers exist, but the big difference is not between them, but between using a CI server and not using a CI server. Here is the list again, only this time with the name of the java file that does the scoring for each.

- Ci1-Jenkins.java

- Ci2-Hudson.java

- Ci3-ThoughtworksGo.java

- Ci4-Bamboo.java

Scoring each of these means writing each of the above java classes, copying and pasting the common scoring code into each, and then changing whatever is unique.

We all know the problems of maintaining copy-pasted code. Not good.

So instead, we refactor the above group to add a common super-class. Now the or-group class structure looks like this.

- Ci0-ContinuousIntegration.java

- Ci1-Jenkins.java – extends Ci0-ContinuousIntegration

- Ci2-Hudson.java – extends Ci0-ContinuousIntegration

- Ci3-ThoughtworksGo.java – extends Ci0-ContinuousIntegration

- Ci4-Bamboo.java – extends Ci0-ContinuousIntegration

Now we can put the common scoring code in Ci0-ContinuousIntegration.java, and the other classes will only contain the scoring code that pertains to that unique server.
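The class structure can be sketched like this. Hyphens are not legal in Java class names, so the sketch writes the names without them; the method names and score values are made up for illustration and are not taken from the SMOSLT codebase.

```java
// Hypothetical sketch: hyphens are not legal in Java class names, so the
// "Ci1-Jenkins" style names are written without them. Score values are made up.
abstract class Ci0ContinuousIntegration {
    // Scoring shared by every CI server: the benefit of having CI at all.
    int feedbackSpeed() {
        return 40;
    }

    // Hours needed for this particular server; each subclass supplies its own.
    abstract int setupHours();
}

class Ci1Jenkins extends Ci0ContinuousIntegration {
    @Override
    int setupHours() {
        return 16;
    }
}

class Ci4Bamboo extends Ci0ContinuousIntegration {
    @Override
    int setupHours() {
        return 24;
    }
}

class OrGroupInheritanceDemo {
    public static void main(String[] args) {
        Ci0ContinuousIntegration[] servers = { new Ci1Jenkins(), new Ci4Bamboo() };
        for (Ci0ContinuousIntegration server : servers) {
            System.out.println(server.getClass().getSimpleName()
                    + ": feedbackSpeed=" + server.feedbackSpeed()
                    + ", setupHours=" + server.setupHours());
        }
    }
}
```

Only `setupHours` differs between the subclasses, so a change to the shared scoring is made once in the superclass.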

Spreadsheet Reporting

The existence of an or-grouping in a SMOSLT.options run alerts the SMOSLT.analytics module that you care about comparing various Continuous Integration options.

So the SMOSLT.analytics module prepares a separate tab in the spreadsheet document, just to compare those options. It names this tab, appropriately, “Continuous Integration”.

Or-Group Score Summarization?

As mentioned above, you have two primary issues when looking at Continuous Integration for your project.

- Should I even do Continuous Integration at all, or devote resources to something else?

- If I do, which of the many attractive servers should I choose to implement?

SMOSLT.analytics will prepare a spreadsheet with potentially many sheets to help you with this and other options. As stated above, it will even prepare a tab within that spreadsheet to help you with the second question: choosing between or-group options.

The analytics piece does NOT, however, help you aggregate or summarize Continuous Integration servers for the first question. If you give it 4 or-group options, it will show each individually, making your spreadsheet potentially harder to read when deciding whether to commit resources to Continuous Integration or some other option. For that reason, you may wish to make a series of separate runs. Try this sequence, for example.

- Pick Ci1-Jenkins alone, in your first runs, to compare what happens when you commit resources to Continuous Integration versus committing resources to other options such as Docker Deployments.

- Once you’ve decided that Continuous Integration is probably going to be included in your project plan, make some more runs, each with one of the other CI or-group options included. That will let you compare the various CI servers and make a final decision.

SMOSLT.options – Ordering By Name Feature

Sometimes sequence of options matters.

Example:

- Evaluate Continuous Integration

- Evaluate …A

- Evaluate …B

Might not produce the same comparison as

- Evaluate …B

- Evaluate …C

- Evaluate Continuous Integration

To solve this problem and others – use prefixing

Aa-MyOptionOne

Ab-MyOptionTwo

This makes option MyOptionOne always evaluate before MyOptionTwo
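A minimal sketch of the idea, assuming that evaluation order is simply the natural String ordering of the option names (the `OptionOrdering` class is hypothetical):

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch: assumes evaluation order is plain String ordering of the names.
class OptionOrdering {
    static List<String> evaluationOrder(List<String> optionNames) {
        List<String> sorted = new ArrayList<>(optionNames);
        Collections.sort(sorted); // natural (lexicographic) String order
        return sorted;
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("Ab-MyOptionTwo", "Aa-MyOptionOne");
        System.out.println(evaluationOrder(names)); // [Aa-MyOptionOne, Ab-MyOptionTwo]
    }
}
```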

To really explain this you might need a lot more detail about what actually happens in a scoring module, for example the elimination of creation of future tasks.

You also need to explain how this does and does not integrate with or-group naming

You also need to explain that this is all visible in the IDE

You need to explain that they should expect to refactor names frequently, so don’t fall in love with any name prefix like Bjc, because it might need to become Kdd later to rework a sequence.

This also exemplifies the higher order rule that says there’s a lot of stuff we do in the IDE that other apps would do in much more refined and elegant ways. We could do them in refined and elegant ways too, but it would require additional levels of abstractions that we’re too cheap to do for free.

You also need to explain that it is permanent, and you can’t adjust it. This both solves, and causes lots of potential problems. You might sometimes wish to adjust option sequence. But to write the program to allow that would have so many consequences that it would break the KISS principle as relates to this software.

SMOSLT.options Module

The SMOSLT options feature is what runs your assumptions against your schedule with the options that you choose to compare with each other.

The Options Module

In the codebase it goes by the name of smoslt.options

For the rest of this document, we will refer to this module as simply “options” or “options feature”.

The options module, like all other modules in the SMOSLT system, is an OSGi bundle or jar, and has almost all other SMOSLT jars as dependencies. It also has OptaPlanner as a dependency.

Everything else in the SMOSLT codebase is deterministic by comparison. The options feature allows you to compare options that are not even realistic to compare in a more deterministic way. The options feature runs on the OptaPlanner backbone, which allows you to compare combinations of options, a problem that is NP-Complete by its very nature.

NP-Complete mathematics is too big a topic to discuss here, but that’s OK, because it’s amply covered on Wikipedia.

How Does It Work?

The options feature is designed to be almost completely decoupled from the rest of the SMOSLT codebase.

The options module maintains no awareness of SMOSLT.stacker or how it does its job. The options module thinks of its options as an ordered list of option names. Its job is to simply toggle on or off different combinations of options, and then hand off the list of options that are toggled on to get a score back – in this case, from SMOSLT.stacker.

Example:

options is given these 5 options, in this order.

- option1

- option2

- option3

- option4

- option5

First, it might toggle only option2 and option5 on, and then tabulate the score for that. To do this, it hands off a String “option2 option5” to SMOSLT.stacker, which returns a score of “-217, -486, -344”

So now the options database has this information stored in it:

“option2 option5” = “-217, -486, -344”

The options module continues this process, gathering scores and persisting them, for every combination of 1 to 5 options that it is interested in. When it is time to terminate the run, the options module has gathered a handy little database of many different combinations of options, and a score for each combination.
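The loop described above can be sketched as follows. This is a simplification under stated assumptions: it enumerates every combination exhaustively and uses a stand-in scorer that returns a placeholder, whereas the real module lets OptaPlanner choose which combinations to try and gets its scores from SMOSLT.stacker. `OptionsRun` and its `run` method are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical sketch: exhaustive enumeration with a stand-in scorer.
// The real module lets OptaPlanner choose which combinations to try.
class OptionsRun {
    // Toggles every non-empty combination on, asks the scorer for a score,
    // and stores "options toggled on" -> "score".
    static Map<String, String> run(List<String> options, Function<String, String> scorer) {
        Map<String, String> results = new LinkedHashMap<>();
        int n = options.size();
        for (int mask = 1; mask < (1 << n); mask++) {
            List<String> on = new ArrayList<>();
            for (int i = 0; i < n; i++) {
                if ((mask & (1 << i)) != 0) {
                    on.add(options.get(i)); // keeps the original option order
                }
            }
            String key = String.join(" ", on);
            results.put(key, scorer.apply(key));
        }
        return results;
    }

    public static void main(String[] args) {
        List<String> options = Arrays.asList("option1", "option2", "option3", "option4", "option5");
        // Stand-in for SMOSLT.stacker: returns a fixed placeholder score.
        Map<String, String> db = run(options, key -> "0, 0, 0");
        System.out.println(db.size());                         // 31
        System.out.println(db.containsKey("option2 option5")); // true
    }
}
```

Passing the scorer in as a function mirrors the decoupling described above: the loop never needs to know how a score is produced.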

The options module neither understands, nor cares about, how its scores are arrived at. But it does care about the scores themselves. More about that below.

Why Does SMOSLT.options Module Care About Score?

Since the options module does NOT care about HOW the score was achieved, why does it care about the score itself?

Imagine that the example above was more typical of a real software project, and that instead of 5 options, the real list was more like 100 or more options. With 100 on/off options there are 2^100 (roughly 1.27 × 10^30) possible combinations, far more than you could ever throw computing power at.

For OptaPlanner to do its job, it needs to use the score to evaluate which combination of options to try next. If, for example, option3 and option5 in combination has so far always yielded an unworkable score, then it might decide not to keep trying those two in combination with other options, or at least to not try that combination until other more promising combinations of options were scored first.

What Else Does SMOSLT.options Database Persist, and Why?

Shown in the example above, every combination and score would be persisted for you to do analytics with, using another tool. So it might look like this:

“option2 option5” = “-217, -486, -344”

“option2 option3 option5” = “-313, -486, -344”

“option1 option4” = “-682, -108, -751”

This would be sufficient for you to take into the SMOSLT.analytics piece and summarize your options in charts and graphs, but once you used the various charts and graphs to analyze your best options, you still might wish to know the actual schedule that SMOSLT.stacker used to calculate your score.

For that reason, a binary of the schedule is also persisted with each score. So the real persistence table looks like this:

“option2 option5” = “-217, -486, -344”, [binary]

“option2 option3 option5” = “-313, -486, -344”, [binary]

“option1 option4” = “-682, -108, -751”, [binary]
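One row of that table could be modeled as in the sketch below. The `PersistedRun` class is hypothetical, the byte array is a stand-in for whatever binary form the schedule actually takes, and an in-memory map stands in for the real database.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch of one persisted row; the real storage format is not shown in this post.
final class PersistedRun {
    final String score;            // e.g. "-217, -486, -344"
    private final byte[] schedule; // opaque binary of the schedule used to produce the score

    PersistedRun(String score, byte[] schedule) {
        this.score = score;
        this.schedule = schedule.clone(); // defensive copy
    }

    int scheduleSize() {
        return schedule.length;
    }
}

class PersistenceDemo {
    public static void main(String[] args) {
        Map<String, PersistedRun> table = new LinkedHashMap<>();
        byte[] placeholder = new byte[] {1, 2, 3}; // stand-in for a serialized schedule
        table.put("option2 option5", new PersistedRun("-217, -486, -344", placeholder));

        PersistedRun row = table.get("option2 option5");
        System.out.println(row.score + ", [" + row.scheduleSize() + " bytes]");
    }
}
```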

What Do The Various Score Numbers Mean?

The first [n] numbers are pass-fail. Only zero is a pass. Everything below zero is a fail.

The rest of the numbers are soft criteria. The only thing that the options module cares about is their size (the closer to zero, the better).

To the options module, these are just numbers. To the stacker and analytics modules, these represent real concerns.
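How the options module might read such a score can be sketched as below. Two things are assumptions: that the number of pass-fail positions is known to the caller (`hardCount`), and that two scores are compared position by position from left to right. `ScoreReader` is a hypothetical name and the score strings in `main` are illustrative.

```java
// Hypothetical sketch: assumes the first hardCount numbers are pass-fail and
// that scores are compared position by position, left to right.
class ScoreReader {
    static int[] parse(String score) {
        String[] parts = score.split(",");
        int[] values = new int[parts.length];
        for (int i = 0; i < parts.length; i++) {
            values[i] = Integer.parseInt(parts[i].trim());
        }
        return values;
    }

    // Pass only if every pass-fail number is exactly zero.
    static boolean passes(int[] score, int hardCount) {
        for (int i = 0; i < hardCount; i++) {
            if (score[i] != 0) {
                return false;
            }
        }
        return true;
    }

    // Positive if a is better than b: at the first differing position,
    // the value closer to zero (the larger one, since values are <= 0) wins.
    // Assumes both scores have the same length.
    static int compare(int[] a, int[] b) {
        for (int i = 0; i < a.length; i++) {
            if (a[i] != b[i]) {
                return Integer.compare(a[i], b[i]);
            }
        }
        return 0;
    }

    public static void main(String[] args) {
        int[] first = parse("0, -486, -344");
        int[] second = parse("-217, -108, -751");
        System.out.println(passes(first, 1));           // true
        System.out.println(passes(second, 1));          // false
        System.out.println(compare(first, second) > 0); // true
    }
}
```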

Related Information

- smoslt.stacker module

- smoslt.analytics module